Setting up Kubernetes for container orchestration can seem daunting at first, but understanding the fundamental concepts and following a systematic approach turns it into a manageable learning journey. Before we get into the technical steps, let’s explore what you already know about containerization and orchestration.

Have you worked with Docker containers before? What challenges have you encountered when managing multiple containers across different environments? Understanding your starting point will help us tailor this guide to build upon your existing knowledge effectively.

Understanding Kubernetes Architecture: The Foundation

Kubernetes setup requires grasping its core architecture components. Think of Kubernetes as a sophisticated conductor orchestrating a complex symphony of containers across multiple servers.

The control plane node (historically called the master node) hosts the cluster's critical components: the API server, the etcd key-value store, the scheduler, and the controller manager. Worker nodes run your actual applications; each one carries a kubelet agent, kube-proxy for Service networking, and a container runtime.

Consider this analogy: if containers are individual musicians, Kubernetes acts as both the conductor and the concert hall management system, ensuring every musician knows their part, has the right resources, and performs in harmony with others.
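
On a cluster built with kubeadm, the control plane components described above typically run as static pods whose manifests sit in /etc/kubernetes/manifests on the control plane node. The sketch below lists them. The helper name list_control_plane_components is made up for illustration, the directory is kubeadm's default rather than a universal location, and reading it may require root.

```shell
# Hypothetical helper: print one component name per static pod manifest.
# Assumption: kubeadm's default manifest directory; other installers may differ.
list_control_plane_components() {
  manifest_dir="${1:-/etc/kubernetes/manifests}"
  for manifest in "$manifest_dir"/*.yaml; do
    [ -e "$manifest" ] || continue      # directory empty or missing
    basename "$manifest" .yaml          # kube-apiserver.yaml -> kube-apiserver
  done
}

# On a kubeadm control plane node this usually prints etcd, kube-apiserver,
# kube-controller-manager and kube-scheduler, one per line.
list_control_plane_components
```

On a worker node the same call prints nothing, which matches the split described above: workers run the kubelet, kube-proxy, and a runtime, not the control plane.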

Prerequisites and Environment Preparation

Before beginning your Kubernetes setup, ensure your environment meets specific requirements:

| Component | Minimum Requirement | Recommended |
|-----------|---------------------|-------------|
| CPU | 2 cores | 4+ cores |
| RAM | 2 GB | 4 GB+ |
| Storage | 20 GB | 50 GB+ |
| Network | Stable connectivity | High bandwidth |
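
A quick way to compare a Linux machine against these minimums is a small preflight script. This is a minimal sketch, not an official check: the helper names meets_minimum and report are invented here, and the RAM threshold is deliberately set a little below 2048 MB because a nominal 2 GB machine reports slightly less usable memory.

```shell
# Minimums taken from the table above (RAM threshold lowered; see note above).
MIN_CPUS=2
MIN_RAM_MB=1700
MIN_DISK_GB=20

# Hypothetical helper: succeed when the measured value ($1) meets the minimum ($2).
meets_minimum() {
  [ "$1" -ge "$2" ]
}

# Print one OK/FAIL line. $1 = label, $2 = measured value, $3 = minimum.
report() {
  if meets_minimum "$2" "$3"; then
    echo "OK   $1: $2 (minimum $3)"
  else
    echo "FAIL $1: $2 (minimum $3)"
  fi
}

cpus=$(nproc)
ram_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
disk_gb=$(df -Pk / | awk 'NR == 2 {print int($4 / 1024 / 1024)}')

report "CPU cores" "$cpus" "$MIN_CPUS"
report "RAM (MB)" "$ram_mb" "$MIN_RAM_MB"
report "Free disk on / (GB)" "$disk_gb" "$MIN_DISK_GB"
```

The script only reads system information, so it is safe to run before installing anything.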

What type of environment are you planning to use? Local development with Minikube, cloud-based clusters, or on-premises infrastructure? Each approach has distinct advantages and considerations for your Kubernetes setup journey.

Step 1: Choosing Your Kubernetes Setup Method

Multiple paths exist for implementing Kubernetes setup, each suited to different use cases and expertise levels:

Local Development Options:

- Minikube: Perfect for learning and testing

- Kind (Kubernetes in Docker): Lightweight local clusters

- Docker Desktop: Integrated Kubernetes support

Production-Ready Solutions:

- kubeadm: Manual cluster bootstrapping tool

- Managed Services: Amazon EKS, Google GKE, Azure AKS

Which option aligns with your current needs and long-term goals? Starting with a local setup often provides the best learning foundation before scaling to production environments.
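
For a local start, Kind can create a multi-node cluster from a short configuration file. The sketch below assumes the kind CLI and Docker are already installed; the file name kind-config.yaml and the cluster name learning are arbitrary choices made for this example.

```shell
# Write a kind cluster definition: one control plane node and two workers.
cat > kind-config.yaml <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
  - role: worker
  - role: worker
EOF

# Create the cluster only if the kind CLI is installed.
if command -v kind >/dev/null 2>&1; then
  kind create cluster --name learning --config kind-config.yaml
else
  echo "kind not found; wrote kind-config.yaml for later use"
fi
```

Deleting the cluster again with kind delete cluster --name learning makes it cheap to experiment and start over.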

Step 2: Installing Container Runtime

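Kubernetes needs a container runtime on every node. Docker remains popular for building images, but Kubernetes 1.24 removed its built-in Docker integration (dockershim), so the kubelet now talks either directly to a CRI runtime such as containerd or CRI-O, or to Docker Engine through the separate cri-dockerd adapter. Installing Docker CE from Docker's repository also installs containerd as the containerd.io package, which kubeadm can use. Its packaged /etc/containerd/config.toml typically ships with the CRI plugin disabled, so check that file before initializing the cluster.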

For Docker installation on Ubuntu:

```shell
# Update package index
sudo apt-get update

# Install required packages
sudo apt-get install -y ca-certificates curl

# Add Docker's official GPG key (apt-key is deprecated; use a keyring file)
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc

# Set up stable repository
echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker CE
sudo apt-get update
sudo apt-get install -y docker-ce
```

Can you explain why container runtime forms such a critical component in Kubernetes setup? Understanding this relationship helps illuminate how Kubernetes orchestrates containers across your infrastructure.
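
The next step calls kubeadm, so kubeadm, kubelet, and kubectl need to be installed on every node first. The sketch below follows the package layout of the community pkgs.k8s.io repository on Debian or Ubuntu. The minor version v1.30 is only a placeholder assumption, and the function names and the RUN_INSTALL switch are invented for this example.

```shell
# Assumption: the Kubernetes minor version to track; change it to the release you want.
K8S_MINOR="${K8S_MINOR:-v1.30}"

# Print the apt source line for the given minor version.
k8s_apt_source() {
  echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/$1/deb/ /"
}

# Add the repository, install the three tools, and pin their versions.
install_kube_tools() {
  sudo mkdir -p /etc/apt/keyrings
  curl -fsSL "https://pkgs.k8s.io/core:/stable:/${K8S_MINOR}/deb/Release.key" \
    | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
  k8s_apt_source "$K8S_MINOR" | sudo tee /etc/apt/sources.list.d/kubernetes.list
  sudo apt-get update
  sudo apt-get install -y kubelet kubeadm kubectl
  sudo apt-mark hold kubelet kubeadm kubectl   # avoid unplanned upgrades
}

# Nothing is installed unless explicitly requested: RUN_INSTALL=1 sh this-script.sh
if [ "${RUN_INSTALL:-0}" = "1" ]; then
  install_kube_tools
fi
```

Holding the packages keeps a routine apt upgrade from moving the cluster to a new Kubernetes version by accident.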

Step 3: Kubernetes Cluster Initialization

With prerequisites satisfied, initiate your Kubernetes setup using kubeadm for a comprehensive learning experience:

```shell
# Initialize the cluster
sudo kubeadm init --pod-network-cidr=192.168.0.0/16

# Configure kubectl for regular user
mkdir -p "$HOME/.kube"
sudo cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
```

This initialization process establishes your control plane and generates the necessary certificates and configuration files. What do you think happens during this initialization that makes it the cornerstone of successful Kubernetes setup?
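
Behind that single command, kubeadm runs a series of phases: preflight checks, certificate generation, kubeconfig files, static pod manifests for the control plane and etcd, and finally bootstrap tokens and add-ons. The same settings can also be kept in a configuration file, which is easier to review and repeat than a list of flags. The sketch below assumes the v1beta3 configuration API; newer kubeadm releases may prefer a later version, which kubeadm config print init-defaults will show. The file name is arbitrary.

```shell
# Write a kubeadm configuration equivalent to the --pod-network-cidr flag.
cat > kubeadm-config.yaml <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
networking:
  podSubnet: 192.168.0.0/16   # same value as --pod-network-cidr
EOF

# Initialize from the file instead of flags (run on the control plane node):
#   sudo kubeadm init --config kubeadm-config.yaml
```

Keeping this file in version control gives you a record of how the cluster was bootstrapped.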

Step 4: Network Plugin Configuration

Kubernetes setup remains incomplete without proper networking. Container Network Interface (CNI) plugins enable pod-to-pod communication across nodes.

Popular CNI options include:

- Calico: Feature-rich with network policies

- Flannel: Simple overlay network

- Weave Net: Easy setup with encryption

Installing Calico for robust networking:

```shell
# Apply Calico network plugin (pin the manifest to a specific Calico release)
kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/calico.yaml

# Verify installation
kubectl get pods -n kube-system
```

How might different networking requirements influence your CNI plugin choice? Consider factors like security policies, performance needs, and infrastructure complexity.
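
One practical constraint applies to every CNI choice: the pod network range must not overlap the network your nodes live on, and 192.168.0.0/16 is a common range for home and office LANs. The sketch below checks one address against a CIDR block using integer arithmetic. The function names are invented for illustration and the node address is a made-up example, so substitute your own.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when address $1 lies inside CIDR block $2 (for example 192.168.0.0/16).
ip_in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Hypothetical node address; override with NODE_IP=<your node IP>.
NODE_IP="${NODE_IP:-192.168.1.10}"
POD_CIDR="192.168.0.0/16"

if ip_in_cidr "$NODE_IP" "$POD_CIDR"; then
  echo "WARNING: $NODE_IP is inside $POD_CIDR; pick a different pod CIDR"
else
  echo "OK: $NODE_IP does not overlap $POD_CIDR"
fi
```

If the check warns, choose another private range for the pod network when initializing the cluster and configure the CNI plugin to match.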

Step 5: Adding Worker Nodes

Expand your Kubernetes setup by joining worker nodes to the cluster:

```shell
# On the control plane node, get the join command
kubeadm token create --print-join-command

# On worker nodes, run the generated command
sudo kubeadm join <master-ip>:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>
```

Verify node addition:

```shell
# Check cluster status
kubectl get nodes

# View detailed node information
kubectl describe nodes
```
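
Freshly joined nodes usually report NotReady until the network plugin is running on them. When a cluster has more than a handful of nodes, a small filter makes the stragglers easy to spot. This is a sketch that parses the default kubectl get nodes column layout; the function name not_ready_nodes is invented here.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ {print $1}'
}

# Usage against a live cluster:
#   kubectl get nodes --no-headers | not_ready_nodes
#
# To block until every node is Ready (or the timeout expires):
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```

A node listed as Ready,SchedulingDisabled still counts as Ready here, since cordoning is a scheduling decision rather than a health problem.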

Essential Kubernetes Setup Configurations

Resource Management and Limits

Proper resource allocation ensures stable cluster operation:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-quota
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
```
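
Once a quota like this constrains CPU and memory, the namespace rejects pods whose containers omit requests or limits. A LimitRange in the same namespace can supply defaults so that ordinary manifests keep working. The sketch below writes one to a file; the object name and the specific values are illustrative assumptions chosen to fit inside the quota.

```shell
# Write a LimitRange that fills in requests and limits for containers that omit them.
cat > limit-range.yaml <<'EOF'
apiVersion: v1
kind: LimitRange
metadata:
  name: default-limits        # hypothetical name
spec:
  limits:
    - type: Container
      defaultRequest:         # used when a container sets no requests
        cpu: 100m
        memory: 128Mi
      default:                # used when a container sets no limits
        cpu: 250m
        memory: 256Mi
EOF

# Apply it to the same namespace as the quota, for example:
#   kubectl apply -n <namespace> -f limit-range.yaml
```

With these defaults, four such containers would request 400m CPU and 512Mi of memory in total, which stays within the quota's 1 CPU and 1Gi request ceiling.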

Security Considerations

Kubernetes setup security involves multiple layers:

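| Security Layer | Implementation | Purpose |
|----------------|----------------|---------|
| RBAC | Role-based access control | User and service account permissions |
| Network Policies | Traffic restriction rules | Pod communication |
| Pod Security | Security contexts and Pod Security admission | Container privileges |
| Secrets Management | Secret objects with encryption at rest enabled (base64-encoded only by default) | Sensitive information |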
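
Two of these layers can be illustrated with very small manifests: a default-deny NetworkPolicy and a read-only RBAC Role. This is a starting sketch rather than a complete security baseline. The object names and the file name are assumptions, and the NetworkPolicy only takes effect when the CNI plugin enforces policies, as Calico does.

```shell
# Write a default-deny ingress policy and a read-only Role into one file.
cat > security-baseline.yaml <<'EOF'
# Deny all inbound pod traffic in the namespace unless another policy allows it.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
    - Ingress
---
# Read-only access to pods, to be bound to a user or service account.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
EOF

# Apply to a namespace, then verify what a given identity may do:
#   kubectl apply -n <namespace> -f security-baseline.yaml
#   kubectl auth can-i list pods --as=<user> -n <namespace>
```

The Role grants nothing until a RoleBinding attaches it to a subject, which keeps the grant and the assignment reviewable as separate steps.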

What security challenges do you anticipate in your specific environment? Understanding potential vulnerabilities helps prioritize security measures during Kubernetes setup.

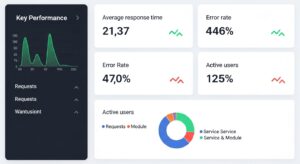

Monitoring and Observability Integration

Complete Kubernetes setup includes monitoring capabilities. Deploy essential observability tools:

Prometheus and Grafana Stack:

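```shell
# Add Prometheus Helm repository and refresh the local chart index
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update

# Install the kube-prometheus-stack chart (Prometheus, Alertmanager, Grafana)
helm install prometheus prometheus-community/kube-prometheus-stack
```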

Consider implementing ELK Stack or Datadog for comprehensive logging and monitoring solutions.
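
Chart defaults are rarely what you want to keep, so overrides usually go into a values file passed to Helm. The sketch below sets two commonly adjusted kube-prometheus-stack values. The retention period, the placeholder password, the file name, and the monitoring namespace are all example assumptions.

```shell
# Write overrides for the kube-prometheus-stack chart.
cat > monitoring-values.yaml <<'EOF'
prometheus:
  prometheusSpec:
    retention: 7d            # keep metrics for one week (example value)
grafana:
  adminPassword: change-me   # placeholder; supply a real secret in practice
EOF

# Install into a dedicated namespace using the overrides:
#   helm install prometheus prometheus-community/kube-prometheus-stack \
#     --namespace monitoring --create-namespace -f monitoring-values.yaml
```

Running helm show values prometheus-community/kube-prometheus-stack lists every setting the chart accepts, which is the safest way to confirm a key before relying on it.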

Common Kubernetes Setup Challenges and Solutions

Understanding typical obstacles helps navigate your Kubernetes setup journey more effectively:

- DNS Resolution Issues: Often stem from incorrect cluster DNS configuration or network plugin problems. Verify CoreDNS pod status and network connectivity.

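- Certificate Expiration: Kubernetes certificates have limited lifespans; the client certificates kubeadm issues expire after one year by default. Check them with kubeadm certs check-expiration, renew them with kubeadm certs renew all or as part of a regular control plane upgrade, or use a managed service that handles certificate management for you.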

- Resource Exhaustion: Monitor cluster resources continuously. Implement horizontal pod autoscaling and cluster autoscaling for dynamic resource management.

How might you approach troubleshooting when your pods remain in pending state? Developing systematic debugging approaches proves invaluable for maintaining healthy Kubernetes environments.
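
A systematic first pass for Pending pods is to list them, then read the scheduler's events for each one. Typical causes are insufficient CPU or memory on the nodes, node selectors or taints that no node satisfies, and unbound persistent volume claims. The filter below is a sketch that relies on the default column layout of kubectl get pods -A; the function name pending_pods is invented here.

```shell
# Read `kubectl get pods -A --no-headers` output on stdin and print
# namespace/name for every pod whose STATUS column is Pending.
pending_pods() {
  awk '$4 == "Pending" {print $1 "/" $2}'
}

# Usage against a live cluster:
#   kubectl get pods -A --no-headers | pending_pods
#
# Then inspect each result; the Events section explains why scheduling failed:
#   kubectl describe pod <name> -n <namespace>
#   kubectl get events -n <namespace> --sort-by=.lastTimestamp
```

The same list is available without parsing through kubectl get pods -A --field-selector=status.phase=Pending; the filter form is shown because it is easy to adapt to other statuses.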

Best Practices for Production Kubernetes Setup

Successful production deployment requires adherence to established best practices:

- High Availability Configuration: Deploy an odd number of control plane nodes (at least three, so etcd keeps its quorum when one fails) across different availability zones. This ensures cluster resilience against individual node failures.

- Backup and Disaster Recovery: Implement regular etcd backups and practice restoration procedures. Document recovery processes thoroughly.

- Cluster Upgrades: Plan rolling upgrade strategies minimizing application downtime. Test upgrade procedures in staging environments first.

- Namespace Organization: Structure applications using logical namespace separation. This improves resource management and security isolation.
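
The etcd backup mentioned above can be scripted with etcdctl. The sketch below assumes a kubeadm cluster, where etcd listens on 127.0.0.1:2379 and its certificates sit under /etc/kubernetes/pki/etcd. The backup directory and both function names are hypothetical, and etcdctl must be installed on the control plane node.

```shell
# Build a timestamped snapshot path inside the given directory.
snapshot_path() {
  echo "$1/etcd-$(date +%Y%m%d-%H%M%S).db"
}

# Save an etcd snapshot. Endpoint and certificate paths are kubeadm defaults
# (an assumption); adjust them for clusters installed another way.
backup_etcd() {
  target=$(snapshot_path "${1:-/var/backups/etcd}")
  sudo mkdir -p "$(dirname "$target")"
  sudo ETCDCTL_API=3 etcdctl \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    snapshot save "$target"
}

# Run on a control plane node, for example from a daily cron job:
#   backup_etcd /var/backups/etcd
```

A backup only counts once it has been restored successfully, so rehearse the restore on a scratch cluster and copy snapshots off the node that produced them.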

Advanced Kubernetes Setup Configurations

Custom Resource Definitions (CRDs)

Extend Kubernetes functionality through custom resources tailored to specific application needs:

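```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: applications.example.com   # must be <plural>.<group>
spec:
  group: example.com
  scope: Namespaced
  names:                           # illustrative names matching the metadata above
    plural: applications
    singular: application
    kind: Application
  versions:
    - name: v1
      served: true
      storage: true
      schema:                      # v1 CRDs require a schema for every version
        openAPIV3Schema:
          type: object
          x-kubernetes-preserve-unknown-fields: true
```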
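
Once a definition like this is registered, instances of the new type can be created like any built-in object. The sketch below assumes the CRD declares the kind Application with the plural applications, which matches the name applications.example.com. The instance name and the spec fields are purely illustrative, since the CRD's schema decides which fields are actually accepted.

```shell
# Write an example custom resource for the applications.example.com CRD.
cat > sample-application.yaml <<'EOF'
apiVersion: example.com/v1
kind: Application            # assumes the CRD's names.kind is Application
metadata:
  name: demo-app             # hypothetical instance name
spec:
  image: nginx:1.27          # illustrative fields only
  replicas: 2
EOF

# After the CRD is applied:
#   kubectl apply -f sample-application.yaml
#   kubectl get applications
```

On its own the object is just stored data; it only does something once a controller watches for it, which is where operators come in.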

Operators and Controllers

Implement custom controllers automating complex application lifecycle management. Popular frameworks include Kubebuilder and Operator SDK.

Integration with CI/CD Pipelines

Modern Kubernetes setup incorporates continuous integration and deployment workflows. Popular tools include:

- Jenkins X: Kubernetes-native CI/CD platform

- Tekton: Cloud-native pipeline framework

- ArgoCD: GitOps continuous delivery tool

- Flux: GitOps operator for Kubernetes

How might GitOps principles transform your application deployment approach? Exploring declarative deployment models often reveals more reliable and auditable release processes.
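
In a GitOps workflow the desired state lives in a Git repository and a controller inside the cluster keeps reality in sync with it. With Argo CD, that link is declared as an Application resource. The sketch below assumes Argo CD is installed in the argocd namespace; the repository URL, path, application name, and target namespace are hypothetical.

```shell
# Write an Argo CD Application that syncs manifests from Git into the cluster.
cat > argocd-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: demo-app
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example/demo-app-config.git   # hypothetical repo
    targetRevision: main
    path: k8s
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD runs in
    namespace: demo
  syncPolicy:
    automated:
      prune: true      # delete resources that were removed from Git
      selfHeal: true   # revert manual changes made in the cluster
EOF

# Register it with Argo CD:
#   kubectl apply -f argocd-app.yaml
```

With automated sync enabled, a merged pull request becomes the deployment action, and the Git history doubles as the audit trail.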

Performance Optimization and Scaling

Optimize your Kubernetes setup for varying workload demands:

- Horizontal Pod Autoscaler (HPA): Automatically scales pod replicas based on CPU utilization, memory usage, or custom metrics.

- Vertical Pod Autoscaler (VPA): Adjusts resource requests and limits for individual pods based on historical usage patterns.

- Cluster Autoscaler: Dynamically adds or removes nodes based on pending pod resource requirements.
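
The Horizontal Pod Autoscaler's core calculation is simple: desired replicas equal the current replica count multiplied by the ratio of the observed metric to the target, rounded up. The sketch below reproduces that arithmetic with integers and then writes a matching manifest. The function name, the Deployment name web, and the numeric targets are assumptions; the real controller also applies a tolerance and stabilization windows that this sketch ignores, and CPU-based scaling needs metrics-server running in the cluster.

```shell
# desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)
# $1 = current replicas, $2 = observed metric, $3 = target metric
hpa_desired_replicas() {
  echo $(( ($1 * $2 + $3 - 1) / $3 ))
}

# 4 replicas averaging 90% CPU against a 60% target -> prints 6
hpa_desired_replicas 4 90 60

# Write an HPA that keeps average CPU utilization near 60%.
cat > web-hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web              # hypothetical Deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 60
EOF

# Apply it once the Deployment exists:
#   kubectl apply -f web-hpa.yaml
```

Utilization is measured against each container's CPU request, so the target Deployment must declare requests for the autoscaler to have anything to compare against.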

Conclusion

Mastering Kubernetes setup opens doors to scalable, resilient container orchestration. This approach, from understanding core architecture through implementing production-ready configurations, provides the foundation for successful container orchestration initiatives.

Kubernetes setup represents an iterative learning process. Start with simple local deployments, gradually incorporating advanced features as your understanding deepens. Each challenge encountered becomes an opportunity to strengthen your orchestration expertise.

What aspect of Kubernetes setup resonates most strongly with your current project needs? Whether focusing on security hardening, monitoring integration, or scalability planning, building upon these foundational concepts will accelerate your container orchestration journey.

The container orchestration landscape continues evolving rapidly. Stay engaged with the Kubernetes community, explore emerging patterns, and maintain curiosity about innovative solutions addressing complex distributed system challenges.