Deploying applications with Docker changes how you think about application delivery and infrastructure management. A container packages an application together with its runtime and dependencies, which makes deployments more consistent across environments and easier to scale.

Before diving into the technical implementation, take stock of where you stand: What is your current experience with containerization? Have you encountered deployment inconsistencies between your development and production environments? Your answers will tell you which of the sections below deserve the most attention.

Understanding Docker Deployment Fundamentals

Docker deployment represents a paradigm shift from traditional application deployment methods. Instead of installing applications directly on servers, we package everything needed to run an application into lightweight, portable containers.

Think of it this way: if traditional deployment is like moving to a new house and hoping all your furniture fits, Docker deployment is like having a perfectly designed, self-contained mobile home that works anywhere. The container includes your application code, runtime environment, system libraries, and dependencies—everything needed for consistent execution.

Why Deploy Applications with Docker

The power of deploying applications with Docker lies in solving the infamous “it works on my machine” problem. Consider these scenarios:

Your development team builds an application that works perfectly on their local machines, but when deployed to staging or production, mysterious errors appear. Database connections fail, libraries are missing, or configuration differences cause crashes. Sound familiar?

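Docker addresses these issues by shipping the application together with its runtime, system libraries, and dependencies as a single image, so the same image that runs on your laptop runs on your production servers. What can still differ is whatever lives outside the image: the host kernel, the CPU architecture, and the configuration or secrets injected at runtime.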

Step 1: Containerizing Your Application for Docker Deployment

Let’s explore how to prepare your application for Docker deployment. The first step involves creating a Dockerfile—your blueprint for building consistent application containers.

What type of application are you working with? A web application, API service, or database-driven application? The containerization approach varies slightly depending on your application architecture.

Creating an Effective Dockerfile

Here’s a practical example of containerizing a Node.js application:

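    FROM node:18-alpine
    WORKDIR /app

    # Copy only the dependency manifests first so this layer stays cached
    COPY package*.json ./
    # --omit=dev replaces the deprecated --only=production flag
    RUN npm ci --omit=dev

    COPY . .
    EXPOSE 3000
    CMD ["node", "server.js"]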

Notice how this Dockerfile follows best practices:

- Uses a specific, lightweight base image

- Sets a working directory

- Copies dependency files first for better caching

- Installs dependencies before copying source code

- Exposes the necessary port

- Defines the startup command

Can you identify why we copy package*.json files before copying the entire application? This optimization leverages Docker’s layer caching mechanism—if dependencies haven’t changed, Docker reuses the cached layer, significantly speeding up subsequent builds.
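
The caching behavior described above can be modeled in a few lines of JavaScript. This is a deliberately simplified sketch, not Docker's actual implementation: it assumes each layer's cache key depends on the parent layer's key, the instruction text, and a checksum of any copied files. The names `layerKeys`, `rebuiltSteps`, and `build` are made up for illustration.

```javascript
// Simplified model of the layer cache: a layer's key is derived from its
// parent's key, the instruction text, and a checksum of the files it reads.
const { createHash } = require("node:crypto");

function sha256(text) {
  return createHash("sha256").update(text).digest("hex");
}

// steps: [{ instruction, inputs }] where `inputs` stands in for the checksum
// of the files a COPY instruction reads ("" for other instructions).
function layerKeys(steps) {
  let parent = "";
  return steps.map((step) => {
    parent = sha256(`${parent}\n${step.instruction}\n${step.inputs}`);
    return parent;
  });
}

// Lists the instructions whose cache key changed between two builds.
function rebuiltSteps(before, after) {
  const cached = layerKeys(before);
  return layerKeys(after)
    .map((key, i) => (key === cached[i] ? null : after[i].instruction))
    .filter((instruction) => instruction !== null);
}

// Hypothetical build description mirroring the Dockerfile above.
const build = (manifestSum, sourceSum) => [
  { instruction: "FROM node:18-alpine", inputs: "" },
  { instruction: "WORKDIR /app", inputs: "" },
  { instruction: "COPY package*.json ./", inputs: manifestSum },
  { instruction: "RUN npm ci", inputs: "" },
  { instruction: "COPY . .", inputs: sourceSum },
];

// Only the source changed: the dependency install layer stays cached.
console.log(rebuiltSteps(build("m1", "s1"), build("m1", "s2")));
// The manifest changed: the install layer and everything after it is rebuilt.
console.log(rebuiltSteps(build("m1", "s1"), build("m2", "s1")));
```

In this model a source-only change rebuilds just the final `COPY . .` step, while a manifest change cascades through every later step, which is why the manifests are copied first.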

Step 2: Building and Testing Docker Images

Building Docker images for deployment requires attention to optimization and security. The docker build command transforms your Dockerfile into an executable image:

docker build -t your-app:latest .

But effective Docker deployment goes beyond basic building. Consider these optimization strategies:

Multi-stage Builds for Production Deployment

Multi-stage builds dramatically reduce image size and improve security by separating build dependencies from runtime requirements:

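    # Build stage: full image with dev dependencies and build tooling
    FROM node:18 AS builder
    WORKDIR /app
    COPY package*.json ./
    RUN npm ci
    COPY . .
    RUN npm run build

    # Production stage: runtime dependencies and compiled output only
    FROM node:18-alpine
    WORKDIR /app
    COPY package*.json ./
    # --omit=dev replaces the deprecated --only=production flag
    RUN npm ci --omit=dev
    COPY --from=builder /app/dist ./dist
    CMD ["node", "dist/server.js"]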

What advantages do you see in this approach? The final image contains only production dependencies and compiled code, resulting in smaller, more secure containers.

Testing Your Docker Images

Before deployment, thorough testing ensures your containerized application behaves correctly:

    # Run container locally for testing
    docker run -d -p 3000:3000 --name test-app your-app:latest

    # Check container logs
    docker logs test-app

    # Execute commands inside running container
    docker exec -it test-app /bin/sh

How might you verify that your application is responding correctly within the container? Testing should include functionality verification, performance validation, and security scanning.
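
One way to automate the functionality check is a small smoke test that polls the container until it answers. The sketch below is a minimal example, assuming Node.js 18 or later (for the built-in `fetch` and `AbortSignal.timeout`) and an application that exposes an HTTP endpoint returning a 2xx status when healthy. The names `waitForHealthy` and `httpProbe` and the default retry values are illustrative.

```javascript
// Polls a readiness probe until it succeeds or the attempts run out.
// `probe` is any async function that resolves to true when the app is healthy.
async function waitForHealthy(probe, { attempts = 10, delayMs = 500 } = {}) {
  for (let attempt = 1; attempt <= attempts; attempt += 1) {
    try {
      if (await probe()) return attempt; // number of tries it took
    } catch (err) {
      // Connection errors are expected while the container is still starting.
    }
    if (attempt < attempts) {
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw new Error(`not healthy after ${attempts} attempts`);
}

// HTTP probe built on the fetch API that ships with Node.js 18 and later.
function httpProbe(url) {
  return async () => {
    const response = await fetch(url, { signal: AbortSignal.timeout(2000) });
    return response.ok;
  };
}
```

After `docker run -d -p 3000:3000 ...`, a call such as `waitForHealthy(httpProbe("http://localhost:3000/health"))` would resolve once the app responds; the `/health` path is an assumption about your application.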

Step 3: Docker Deployment Strategies and Orchestration

Deploying applications with Docker in production environments requires sophisticated orchestration strategies. Single-container deployments rarely suffice for real-world applications.

Container Orchestration Options

Modern Docker deployment typically involves orchestration platforms that manage multiple containers across distributed infrastructure:

Docker Compose suits development and small-scale production deployments:

    version: '3.8'
    services:
      web:
        build: .
        ports:
          - "3000:3000"
        environment:
          - NODE_ENV=production
        depends_on:
          - database
      database:
        image: postgres:15
        environment:
          POSTGRES_DB: myapp
          POSTGRES_USER: user
          POSTGRES_PASSWORD: password
        volumes:
          - pgdata:/var/lib/postgresql/data
    volumes:
      pgdata:

Kubernetes provides enterprise-grade orchestration for complex Docker deployments:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: app-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
            - name: app
              image: your-app:latest
              ports:
                - containerPort: 3000

Which orchestration approach aligns with your infrastructure requirements? Consider factors like team size, scalability needs, and operational complexity when choosing between Docker Compose, Kubernetes, or cloud-native solutions.

Step 4: Production Docker Deployment Best Practices

Production Docker deployment demands adherence to security, monitoring, and reliability best practices. Let’s explore critical considerations for robust application deployment.

Security Hardening for Docker Deployment

Security begins with your base images and extends through your entire deployment pipeline:

Use minimal base images:

    FROM alpine:3.18
    RUN apk add --no-cache nodejs npm

Run containers as non-root users:

    RUN addgroup -g 1001 -S nodejs
    # -G places the new user in the nodejs group created above
    RUN adduser -S -u 1001 -G nodejs nodejs
    USER nodejs

Implement resource constraints:

    services:
      web:
        deploy:
          resources:
            limits:
              cpus: '0.5'
              memory: 512M

How do these security measures protect your deployed applications? Each practice reduces attack surfaces and limits potential damage from security breaches.

Health Checks and Monitoring

Effective Docker deployment includes comprehensive health monitoring:

In the Dockerfile:

    # curl must be present in the image; Alpine-based images often omit it
    HEALTHCHECK --interval=30s --timeout=3s --start-period=40s --retries=3 \
      CMD curl -f http://localhost:3000/health || exit 1

Or in Docker Compose:

    services:
      web:
        healthcheck:
          test: ["CMD", "curl", "-f", "http://localhost:3000/health"]
          interval: 30s
          timeout: 10s
          retries: 3

What information should your health check endpoints provide? Consider including database connectivity, external service availability, and application-specific metrics.
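
A health endpoint that reports on its dependencies might look like the following sketch. It assumes each dependency check is an async function that resolves on success and rejects on failure. The names `buildHealthReport` and `healthHandler` and the `ok`/`degraded` vocabulary are illustrative choices, not a standard.

```javascript
// Runs named dependency checks in parallel and summarizes them in one report.
async function buildHealthReport(checks) {
  const names = Object.keys(checks);
  // The async wrapper turns synchronous throws into rejections as well.
  const results = await Promise.allSettled(
    names.map(async (name) => checks[name]())
  );
  const details = {};
  results.forEach((result, i) => {
    details[names[i]] =
      result.status === "fulfilled"
        ? { ok: true }
        : {
            ok: false,
            error: String((result.reason && result.reason.message) || result.reason),
          };
  });
  const healthy = Object.values(details).every((detail) => detail.ok);
  return { status: healthy ? "ok" : "degraded", checks: details };
}

// Handler for Node's built-in http module: 200 when every check passes and
// 503 otherwise, so that `curl -f` fails on a degraded report.
function healthHandler(checks) {
  return async (req, res) => {
    const report = await buildHealthReport(checks);
    res.writeHead(report.status === "ok" ? 200 : 503, {
      "Content-Type": "application/json",
    });
    res.end(JSON.stringify(report));
  };
}
```

Returning a non-2xx status on failure matters because `curl -f` treats HTTP error responses as a failed command, which is what marks the container unhealthy.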

Step 5: Advanced Docker Deployment Patterns

As your Docker deployment expertise grows, advanced patterns become essential for complex applications and high-availability requirements.

Blue-Green Deployment with Docker

Blue-green deployment enables zero-downtime updates by maintaining two identical production environments:

    # Deploy new version to green environment
    docker-compose -f docker-compose.green.yml up -d

    # Test green environment
    curl http://green-environment.example.com/health

    # Switch traffic from blue to green
    # (typically done via load balancer configuration)

    # Cleanup old blue environment
    docker-compose -f docker-compose.blue.yml down
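
Tearing down the old environment only stays invisible to users if the application finishes in-flight requests before it exits. When Docker stops a container it sends SIGTERM and, by default, SIGKILL ten seconds later. The sketch below shows one way a Node.js server could react; the function name, the 8-second default, and the injectable `proc` parameter (there only to make the logic testable) are assumptions.

```javascript
// On SIGTERM, stop accepting new connections and exit once in-flight
// requests finish, or after `timeoutMs` if they do not.
function installGracefulShutdown(server, { timeoutMs = 8000, proc = process } = {}) {
  proc.once("SIGTERM", () => {
    // Force an exit shortly before the default 10-second grace period ends.
    const timer = setTimeout(() => proc.exit(1), timeoutMs);
    timer.unref(); // the timer alone should not keep the process alive
    server.close(() => {
      clearTimeout(timer);
      proc.exit(0);
    });
  });
}
```

With the exec-form `CMD ["node", "server.js"]`, Node runs as PID 1 and receives the signal directly, so calling `installGracefulShutdown(server)` after `server.listen(...)` is enough to wire this in.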

Rolling Updates and Canary Deployments

Modern container orchestration platforms support sophisticated deployment strategies:

Kubernetes Rolling Update:

    spec:
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1

Canary Deployment Configuration (an Argo Rollouts Rollout spec; a plain Kubernetes Deployment has no built-in canary strategy):

    spec:
      replicas: 10
      strategy:
        canary:
          steps:
            - setWeight: 10
            - pause: {duration: 600}
            - setWeight: 50
            - pause: {duration: 600}

How might you implement automated rollbacks if canary deployments detect issues? Consider integrating monitoring metrics and automated decision-making into your deployment pipeline.
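
The automated decision can be reduced to a comparison between the canary and the stable baseline. The following sketch assumes your monitoring system can supply request counts, error counts, and p95 latency for both. The metric field names, the function name, and every threshold are illustrative defaults rather than recommendations.

```javascript
// Decides what to do with a canary by comparing it with the stable baseline.
// Returns "wait", "rollback", or "promote".
function evaluateCanary(baseline, canary, options = {}) {
  const {
    minRequests = 500, // traffic needed before judging the canary
    maxErrorRateDelta = 0.01, // allowed increase in error rate (absolute)
    maxLatencyRatio = 1.2, // allowed p95 latency relative to baseline
  } = options;

  if (canary.requests < minRequests) return "wait";

  const errorRate = (m) => (m.requests === 0 ? 0 : m.errors / m.requests);
  if (errorRate(canary) - errorRate(baseline) > maxErrorRateDelta) {
    return "rollback";
  }
  if (canary.p95LatencyMs > baseline.p95LatencyMs * maxLatencyRatio) {
    return "rollback";
  }
  return "promote";
}
```

A pipeline step could call this during each pause and trigger the platform's rollback command whenever it returns `"rollback"`.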

Docker Deployment Performance Optimization

Optimizing Docker deployment performance involves multiple layers, from image construction to runtime configuration.

Image Optimization Strategies

| Technique | Description | Impact |
| --- | --- | --- |
| Multi-stage builds | Separate build and runtime environments | 50-80% size reduction |
| Layer caching | Optimize Dockerfile instruction order | 2-5x faster builds |
| Base image selection | Choose minimal, purpose-built images | Improved security and performance |
| Dependency management | Remove unnecessary packages | Reduced attack surface |

Runtime Performance Tuning

Docker deployment performance depends heavily on resource allocation and container configuration:

    services:
      web:
        deploy:
          resources:
            limits:
              cpus: '2'
              memory: 2G
            reservations:
              cpus: '1'
              memory: 1G
        ulimits:
          nofile:
            soft: 20000
            hard: 40000

Consider your application’s resource requirements. How do CPU and memory constraints affect your deployment strategy? Proper resource allocation prevents resource contention and ensures predictable performance.
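
A memory limit also affects how the runtime inside the container should be configured. For a Node.js service, one approach is to size the V8 heap relative to the container limit. The sketch below assumes a cgroup v2 host, where the limit is exposed in `/sys/fs/cgroup/memory.max` as either a byte count or the string `max`. The 75% heap fraction is an arbitrary starting point, not a measured value.

```javascript
// Parses the contents of /sys/fs/cgroup/memory.max (cgroup v2).
// Returns the limit in bytes, or null when no limit is set.
function parseMemoryMax(contents) {
  const value = contents.trim();
  if (value === "max") return null;
  const bytes = Number(value);
  if (!Number.isSafeInteger(bytes) || bytes <= 0) {
    throw new Error(`unexpected memory.max value: ${value}`);
  }
  return bytes;
}

// Suggests a value for Node's --max-old-space-size flag (in MiB), leaving
// headroom for buffers, native memory, and the rest of the process.
function suggestOldSpaceMb(limitBytes, heapFraction = 0.75) {
  if (limitBytes === null) return null; // nothing to size against
  return Math.floor((limitBytes / (1024 * 1024)) * heapFraction);
}
```

For a 2G limit this suggests 1536, which could be passed as `node --max-old-space-size=1536 server.js`; measure real memory usage before settling on a fraction.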

Monitoring and Logging Docker Deployments

Comprehensive monitoring transforms Docker deployment from a one-time activity into an ongoing operational capability.

Centralized Logging Solutions

Docker deployment generates logs across multiple containers and services. Centralized logging aggregates this information for analysis:

    services:
      web:
        logging:
          driver: "json-file"
          options:
            max-size: "10m"
            max-file: "3"

Popular logging solutions for Docker deployment include:

- ELK Stack (Elasticsearch, Logstash, Kibana) for comprehensive log analysis

- Fluentd for flexible log collection and forwarding

- Grafana Loki for efficient log aggregation and querying
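
Whichever collector you choose, it is easier to work with when the application writes one structured event per line to stdout, since Docker's logging drivers capture the container's stdout and stderr. The following is a minimal sketch; the field names `time`, `level`, and `message` are a common convention rather than a requirement of any of the tools above.

```javascript
// Builds one JSON log line. Reserved keys (time, level, message) cannot be
// overwritten by caller-supplied fields.
function formatLogLine(level, message, fields = {}, now = new Date()) {
  const entry = { time: now.toISOString(), level, message };
  for (const [key, value] of Object.entries(fields)) {
    if (!(key in entry)) entry[key] = value;
  }
  return JSON.stringify(entry);
}

// Writes the event to stdout, where the container log driver picks it up.
function log(level, message, fields) {
  process.stdout.write(`${formatLogLine(level, message, fields)}\n`);
}
```

A call such as `log("info", "server listening", { port: 3000 })` emits a single line that log collectors can parse without custom patterns.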

External resources for advanced logging configurations:

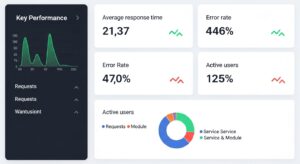

Application Performance Monitoring

Monitoring Docker deployments requires visibility into both container metrics and application performance:

    services:
      prometheus:
        image: prom/prometheus
        ports:
          - "9090:9090"
        volumes:
          - ./prometheus.yml:/etc/prometheus/prometheus.yml
      grafana:
        image: grafana/grafana
        ports:
          - "3000:3000"

What metrics are most critical for your Docker deployment monitoring strategy? Consider tracking container resource utilization, application response times, error rates, and business-specific indicators.
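
For Prometheus to track application-level metrics such as error rates, the application has to expose them in the Prometheus text exposition format. The sketch below hand-rolls a single counter to show what that format looks like. The class and metric names are illustrative, and a production service would more likely use an established client library.

```javascript
// Escapes a label value for the Prometheus text exposition format.
function escapeLabelValue(value) {
  return String(value)
    .replace(/\\/g, "\\\\")
    .replace(/"/g, '\\"')
    .replace(/\n/g, "\\n");
}

// Minimal in-memory counter with labels.
class Counter {
  constructor(name, help) {
    this.name = name;
    this.help = help;
    this.values = new Map(); // serialized label set -> count
  }

  inc(labels = {}, amount = 1) {
    const key = Object.keys(labels)
      .sort()
      .map((label) => `${label}="${escapeLabelValue(labels[label])}"`)
      .join(",");
    this.values.set(key, (this.values.get(key) || 0) + amount);
  }

  // Renders the HELP and TYPE headers followed by one sample line per label set.
  render() {
    const lines = [
      `# HELP ${this.name} ${this.help}`,
      `# TYPE ${this.name} counter`,
    ];
    for (const [key, value] of this.values) {
      lines.push(key ? `${this.name}{${key}} ${value}` : `${this.name} ${value}`);
    }
    return `${lines.join("\n")}\n`;
  }
}
```

Serving the output of `render()` at `/metrics` with the content type `text/plain; version=0.0.4` gives Prometheus something to scrape; the error rate is then derived in queries from counters labeled by status.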

Troubleshooting Common Docker Deployment Issues

Even well-planned Docker deployments encounter challenges. Understanding common issues and their solutions accelerates problem resolution.

Container Startup Failures

When containers fail to start during Docker deployment, systematic troubleshooting reveals root causes:

    # Check container logs for startup errors
    docker logs container-name

    # Inspect container configuration
    docker inspect container-name

    # Debug by running container interactively
    docker run -it --entrypoint /bin/sh image-name

Common startup issues include:

- Missing environment variables

- Incorrect file permissions

- Port binding conflicts

- Resource constraints
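
Missing environment variables are easier to diagnose when the application checks for them at startup and names all of them at once, so the cause shows up on the first lines of `docker logs`. A minimal sketch follows; the function name and the variable names in the usage note are hypothetical.

```javascript
// Reads required settings from an environment object and fails fast with a
// single message naming every missing (or empty) variable.
function loadConfig(env, required) {
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return Object.fromEntries(required.map((name) => [name, env[name]]));
}
```

Calling `loadConfig(process.env, ["NODE_ENV", "DATABASE_URL"])` as the first statement of `server.js` makes a misconfigured container exit immediately with a readable error instead of failing later on its first database query.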

Network Connectivity Problems

Docker deployment networking can be complex, especially in orchestrated environments:

    # Test container network connectivity
    docker exec container-name ping external-service

    # Inspect Docker networks
    docker network ls
    docker network inspect bridge

How do you approach network debugging in containerized environments? Understanding Docker’s networking model helps identify connectivity issues between containers and external services.
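
A successful `ping` shows that a host is reachable, but not that the port your application needs is open, and some minimal images do not include `ping` at all. A TCP-level probe run from inside the application's own runtime can fill that gap. The sketch below uses Node's built-in `net` module; the function name and default timeout are illustrative.

```javascript
const net = require("node:net");

// Resolves true if a TCP connection to host:port succeeds within timeoutMs,
// and false on refusal, DNS failure, or timeout.
function canConnect(host, port, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (ok) => {
      socket.destroy();
      resolve(ok);
    };
    socket.setTimeout(timeoutMs, () => finish(false));
    socket.once("connect", () => finish(true));
    socket.once("error", () => finish(false));
  });
}
```

From a shell inside the container, something like `node -e` with `canConnect("database", 5432).then(console.log)` would test the service name and port from the Compose example exactly as the application resolves them.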

Future-Proofing Your Docker Deployment Strategy

Technology evolution requires adaptive Docker deployment approaches that accommodate changing requirements and emerging technologies.

Emerging Trends in Container Deployment

Consider how these trends might influence your Docker deployment strategy:

- Serverless Containers: Platforms like AWS Fargate and Google Cloud Run abstract infrastructure management while maintaining container benefits.

- Edge Computing: Deploying applications with Docker at edge locations reduces latency and improves user experience.

- GitOps: Infrastructure-as-code approaches integrate Docker deployment with version control workflows.

What aspects of your current deployment process would benefit from these emerging approaches? Evaluate how new technologies align with your organizational objectives and technical requirements.

Building Deployment Automation

Mature Docker deployment strategies emphasize automation and repeatability:

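    # CI/CD pipeline example (GitLab CI syntax)
    stages:
      - build
      - test
      - security-scan
      - deploy

    build:
      stage: build
      script:
        - docker build -t $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA .
        - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA

    # Jobs for the test and security-scan stages are omitted here

    deploy:
      stage: deploy
      script:
        # Deployment and container names match the Kubernetes manifest above
        - kubectl set image deployment/app-deployment app=$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
        - kubectl rollout status deployment/app-deployment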

How might you implement automated rollbacks and deployment validation in your pipeline? Robust automation reduces manual errors and enables faster, more reliable deployments.

Conclusion

Deploying applications with Docker turns software delivery from a complex, error-prone process into a streamlined, repeatable operation. Through containerization, orchestration, and the best practices covered here, Docker deployment lets organizations ship the same artifact through every environment and scale it predictably.

The journey from basic containerization to sophisticated production deployment requires continuous learning and adaptation. As you implement these strategies, consider how each technique addresses your specific challenges and organizational objectives.

What’s your next step in mastering Docker deployment? Whether you’re implementing your first containerized application or optimizing existing deployments, the principles and practices outlined in this guide provide a foundation for success.

Effective Docker deployment combines technical expertise with operational excellence. Start with simple implementations, gradually incorporating advanced patterns as your confidence and requirements grow. The investment in learning Docker deployment pays dividends through improved reliability, faster deployments, and enhanced scalability.