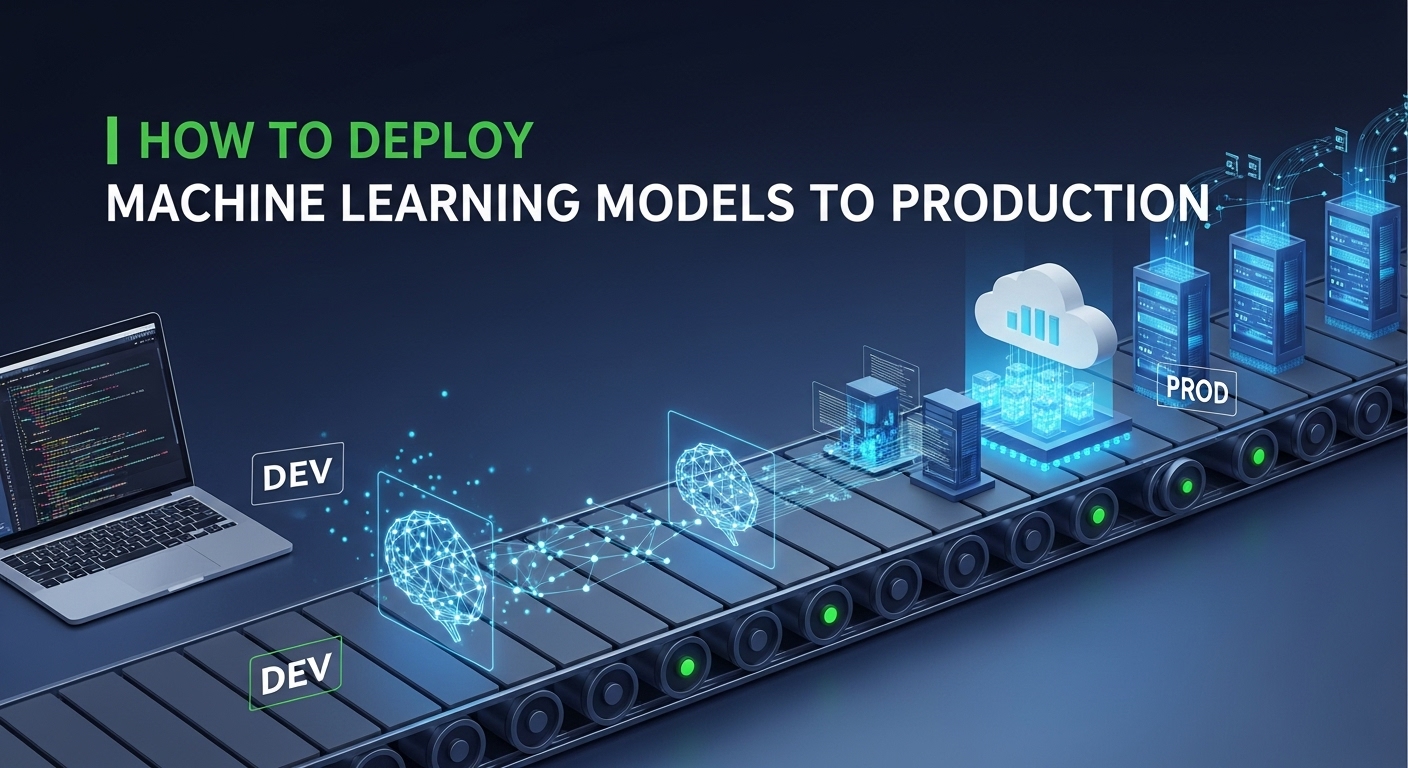

Building a machine learning model is only half the battle. The real challenge begins when you need to deploy machine learning models to production environments where they can deliver actual business value. Many data scientists excel at creating accurate models but struggle with the complexities of production deployment.

This comprehensive guide will walk you through the essential steps, best practices, and common pitfalls to avoid when transitioning your ML models from development to production.

Understanding Machine Learning Model Deployment

Machine learning model deployment refers to the process of making your trained model available in a production environment where it can make predictions on new, real-world data. Unlike traditional software deployment, ML deployment involves unique challenges such as data drift, model versioning, and performance monitoring.

When you deploy machine learning models, you’re essentially creating a bridge between your experimental work and practical business applications. This process requires careful planning, robust infrastructure, and ongoing maintenance to ensure optimal performance.

Step 1: Prepare Your Model for Production

Before you can successfully deploy machine learning models, thorough preparation is essential. This involves several critical components that ensure your model is production-ready.

Model Validation and Testing

Your model must undergo rigorous testing beyond standard accuracy metrics. Implement comprehensive validation procedures including:

- Cross-validation across multiple data splits, plus evaluation on separate held-out datasets

- Edge case testing with unusual input scenarios

- Performance benchmarking under expected load conditions

- Security vulnerability assessments
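As a rough illustration of edge-case testing, the sketch below validates one input record before it reaches the model. The feature names (`age`, `income`), their bounds, and the `validate_features` helper are hypothetical and only stand in for whatever schema your model actually expects.

```python
import math

# Hypothetical feature schema: name -> (min, max) allowed range.
FEATURE_BOUNDS = {"age": (0, 120), "income": (0.0, 1e7)}


def validate_features(features):
    """Return a list of problems found in one input record (empty if valid)."""
    problems = []
    for name, (low, high) in FEATURE_BOUNDS.items():
        if name not in features:
            problems.append(f"missing feature: {name}")
            continue
        value = features[name]
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"non-numeric value for {name}")
        elif math.isnan(value) or math.isinf(value):
            problems.append(f"non-finite value for {name}")
        elif not low <= value <= high:
            problems.append(f"{name} out of range [{low}, {high}]")
    return problems


# Edge cases worth exercising before deployment.
EDGE_CASES = [
    {},                                    # empty payload
    {"age": float("nan"), "income": 1.0},  # NaN value
    {"age": -5, "income": 1.0},            # below the allowed range
    {"age": "42", "income": 1.0},          # wrong type
]
```

Running every entry of `EDGE_CASES` through the validator in your test suite confirms that malformed inputs are rejected with a clear message instead of producing a silent, meaningless prediction.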

Code Refactoring and Optimization

Development code rarely meets production standards. Refactor your model code to improve:

- Performance efficiency and speed

- Memory usage optimization

- Error handling and logging capabilities

- Code maintainability and documentation
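One way to approach the error handling and logging item is to wrap every model call in a small guard. This is a minimal sketch: `safe_predict`, the logger name `model_service`, and the fallback behaviour are assumptions for illustration, not a prescribed design.

```python
import logging
import time

logger = logging.getLogger("model_service")


def safe_predict(model_fn, features, default=None):
    """Call model_fn(features); log latency, and return `default` on failure."""
    start = time.perf_counter()
    try:
        prediction = model_fn(features)
    except Exception:
        # logger.exception records the stack trace alongside the message.
        logger.exception(
            "prediction failed for input of type %s", type(features).__name__
        )
        return default
    elapsed_ms = (time.perf_counter() - start) * 1000
    logger.info("prediction ok in %.2f ms", elapsed_ms)
    return prediction
```

Returning a default keeps the calling service responsive when the model fails. Whether a fallback value is acceptable, or the error should propagate instead, depends on the application.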

For detailed best practices on code optimization, refer to Google’s ML Engineering best practices.

Step 2: Choose Your Deployment Strategy

Several deployment strategies exist for ML models, each with distinct advantages and use cases. Understanding these options is crucial when you deploy machine learning models.

| Deployment Strategy | Best For | Pros | Cons |
| --- | --- | --- | --- |
| Batch Processing | Large-scale predictions | Cost-effective, handles big data | Not real-time, scheduling complexity |
| Real-time API | Interactive applications | Immediate responses, scalable | Higher infrastructure costs |
| Edge Deployment | Mobile/IoT devices | Low latency, offline capability | Limited computational resources |
| Streaming | Continuous data flows | Real-time processing | Complex infrastructure setup |

Real-time API Deployment

This approach involves creating RESTful APIs that serve predictions on-demand. It’s ideal for applications requiring immediate responses, such as recommendation systems or fraud detection.
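To make the idea concrete, here is a minimal sketch of a prediction endpoint built only on Python's standard library. The `predict` function is a placeholder linear score rather than a trained model, and the `/predict` path, the feature names `x1` and `x2`, and port 8080 are all assumptions. A production service would more likely use a dedicated web framework behind a proper application server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Placeholder model: a hypothetical linear score, not a trained model."""
    return 0.5 * features["x1"] + 0.25 * features["x2"]


def handle_predict(body):
    """Turn a raw JSON request body into (status_code, response_dict)."""
    try:
        features = json.loads(body)
        score = predict(features)
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, missing features, or wrong types all map to 400.
        return 400, {"error": f"bad request: {exc}"}
    return 200, {"prediction": score}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8080):
    """Start the server on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping the request logic in `handle_predict`, separate from the HTTP handler, lets you unit test the prediction path without starting a server. Calling `serve()` starts listening for `POST /predict` requests with a JSON body such as `{"x1": 2, "x2": 4}`.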

Batch Processing

Batch processing suits scenarios where predictions are not needed immediately. The model scores large datasets on a schedule, which makes this approach cost-effective for many business applications.
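A batch job usually reads records in chunks so that memory use stays bounded regardless of dataset size. The sketch below shows one way to do that; `chunked`, `score_in_batches`, and the `predict_batch` callable are hypothetical names, and the batch size of 1000 is an arbitrary default.

```python
from itertools import islice


def chunked(records, size):
    """Yield successive lists of at most `size` records from any iterable."""
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def score_in_batches(records, predict_batch, batch_size=1000):
    """Apply predict_batch to each chunk and yield (record, prediction) pairs."""
    for chunk in chunked(records, batch_size):
        predictions = predict_batch(chunk)
        yield from zip(chunk, predictions)
```

Because both functions are generators, the job can stream results to a file or database as each chunk completes instead of holding every prediction in memory.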

Step 3: Select the Right Infrastructure

Infrastructure choice significantly impacts how successfully you deploy machine learning models. Consider these popular options:

Cloud Platforms

Major cloud providers offer specialized ML deployment services:

- Amazon SageMaker: End-to-end ML platform with built-in deployment capabilities

- Google Cloud Vertex AI (successor to AI Platform): Integrated ML services with automatic scaling

- Microsoft Azure ML: Comprehensive MLOps platform with enterprise features

Containerization with Docker

Docker containers provide consistency across different environments. Benefits include:

- Environment reproducibility

- Easier dependency management

- Simplified scaling and deployment

- Better resource isolation

Kubernetes for Orchestration

For complex deployments, Kubernetes offers powerful orchestration capabilities, enabling automated scaling, load balancing, and fault tolerance.

Learn more about container orchestration at the official Kubernetes documentation.

Step 4: Implement Model Versioning and Management

Effective model management becomes critical as you deploy machine learning models at scale. Robust versioning and a central registry let you trace every deployed model back to the code, data, and configuration that produced it.

Version Control Systems

Use specialized ML versioning tools such as:

- MLflow: Open-source platform for ML lifecycle management

- DVC (Data Version Control): Git-like versioning for ML projects

- Weights & Biases: Experiment tracking and model management

Model Registry

Maintain a centralized model registry that tracks:

- Model performance metrics

- Training data characteristics

- Deployment history and rollback capabilities

- Model lineage and dependencies
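The registry responsibilities listed above can be sketched as a small in-memory structure. This is only an illustration of the bookkeeping involved: the class names, fields, and artifact paths are hypothetical, and tools such as MLflow provide persistent, far more complete registries.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str        # where the serialized model lives
    metrics: dict            # e.g. {"auc": 0.91}
    training_data_ref: str   # pointer to the dataset snapshot used


@dataclass
class ModelRegistry:
    versions: dict = field(default_factory=dict)
    history: list = field(default_factory=list)  # deployed versions, oldest first

    def register(self, artifact_uri, metrics, training_data_ref):
        version = len(self.versions) + 1
        self.versions[version] = ModelVersion(
            version, artifact_uri, metrics, training_data_ref
        )
        return version

    def deploy(self, version):
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        self.history.append(version)

    def rollback(self):
        """Drop the current deployment and return the previous version."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier deployment to roll back to")
        self.history.pop()
        return self.history[-1]

    @property
    def current(self):
        return self.history[-1] if self.history else None
```

Recording the deployment history separately from the registered versions is what makes rollback a lookup rather than a rebuild.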

Step 5: Set Up Monitoring and Logging

Continuous monitoring is essential for maintaining deployed models. Unlike traditional software, an ML model can degrade even when its code never changes, because the data it sees in production drifts away from the data it was trained on.

Performance Monitoring

Track key metrics including:

- Prediction accuracy and model performance

- Response time and throughput

- Resource utilization (CPU, memory, storage)

- Error rates and failure patterns
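As a small sketch of how response time and error rate might be tracked in-process, the class below keeps a sliding window of recent requests. `ServingMetrics` and its window size are assumptions for illustration. In practice these numbers are usually exported to a monitoring system rather than held in application memory.

```python
import statistics
from collections import deque


class ServingMetrics:
    """Keep the most recent request outcomes in a fixed-size window."""

    def __init__(self, window=1000):
        self.latencies_ms = deque(maxlen=window)
        self.errors = deque(maxlen=window)

    def record(self, latency_ms, ok=True):
        self.latencies_ms.append(latency_ms)
        self.errors.append(0 if ok else 1)

    def error_rate(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def p95_latency_ms(self):
        if len(self.latencies_ms) < 2:
            return None
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
        return statistics.quantiles(self.latencies_ms, n=20)[-1]
```

Tail latency such as the 95th percentile is often more informative than the mean, because a small fraction of slow requests can hide behind a healthy average.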

Data Drift Detection

Implement systems to detect when incoming data differs significantly from training data. This helps identify when model retraining becomes necessary.
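One common way to quantify drift for a single numeric feature is the Population Stability Index (PSI), which compares the binned distribution of production data against a reference sample from training. The sketch below is one possible implementation, not the only drift test available. The equal-width binning, the bin count, and the `eps` smoothing constant are all assumptions you would tune.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between two numeric samples, using equal-width bins over the reference range."""
    low, high = min(reference), max(reference)
    width = (high - low) / bins or 1.0  # avoid zero width for constant data

    def proportions(sample):
        counts = [0] * bins
        for value in sample:
            # Clamp out-of-range values into the first or last bin.
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(sample), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))
```

A PSI near zero means the two distributions are similar. A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, though the right threshold depends on the feature and the cost of a missed alert.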

Logging Infrastructure

Comprehensive logging should capture:

- All prediction requests and responses

- Model performance metrics over time

- System errors and exceptions

- Security-related events and access patterns
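A simple way to capture prediction requests and responses is to emit one structured JSON record per prediction. The field names, the logger name `predictions`, and the helper functions below are hypothetical; adapt them to whatever your log pipeline expects.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("predictions")


def build_log_record(model_version, features, prediction, latency_ms):
    """Assemble one JSON-serializable audit record for a prediction."""
    return {
        "request_id": str(uuid.uuid4()),
        "timestamp": time.time(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
        "latency_ms": round(latency_ms, 2),
    }


def log_prediction(model_version, features, prediction, latency_ms):
    record = build_log_record(model_version, features, prediction, latency_ms)
    # One JSON object per line is easy for log shippers to parse.
    logger.info(json.dumps(record))
    return record
```

Including the model version in every record makes it possible to attribute a change in prediction quality to a specific release. If the features contain personal data, redact or hash those fields before logging them.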

For advanced monitoring strategies, explore Prometheus monitoring solutions.

Step 6: Ensure Security and Compliance

Security considerations are paramount when you deploy machine learning models, especially in regulated industries.

Data Protection

Implement robust data protection measures:

- Encryption for data in transit and at rest

- Access controls and authentication mechanisms

- Data anonymization and privacy preservation

- Secure API endpoints with proper authentication
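Two of these measures can be sketched with the standard library: checking an API key in constant time, and replacing identifiers with a keyed hash before they are stored. The function names and the idea of a single shared key are simplifying assumptions. Real deployments typically rely on an identity provider and a secrets manager.

```python
import hashlib
import hmac


def is_authorized(presented_key, expected_key):
    """Constant-time comparison avoids leaking key contents through timing."""
    return hmac.compare_digest(presented_key.encode(), expected_key.encode())


def pseudonymize(identifier, secret_salt):
    """Replace an identifier with a keyed hash so records stay joinable but not readable."""
    digest = hmac.new(secret_salt, identifier.encode(), hashlib.sha256)
    return digest.hexdigest()
```

Note that keyed hashing is pseudonymization rather than full anonymization: anyone holding the secret can recompute the mapping, so the secret itself must be protected and the output may still count as personal data under some regulations.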

Compliance Requirements

Consider relevant regulations such as:

- GDPR for European data protection

- HIPAA for healthcare applications

- SOX for financial reporting at publicly traded companies

- Industry-specific compliance standards

Step 7: Plan for Maintenance and Updates

Successful ML deployment requires ongoing maintenance and continuous improvement strategies.

Automated Retraining Pipelines

Establish automated systems that:

- Monitor model performance degradation

- Trigger retraining when performance drops below thresholds

- Validate new model versions before deployment

- Implement gradual rollout strategies for model updates
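The threshold-based trigger described above can be sketched as a rolling accuracy check. `RetrainingTrigger` and its default threshold, window, and minimum sample count are assumptions for illustration. The sketch also assumes ground-truth labels eventually arrive for production predictions, which is not true of every application.

```python
from collections import deque


class RetrainingTrigger:
    """Signal retraining when rolling accuracy falls below a threshold."""

    def __init__(self, threshold=0.85, window=500, min_samples=100):
        self.threshold = threshold
        self.min_samples = min_samples
        self.outcomes = deque(maxlen=window)  # 1 = correct, 0 = incorrect

    def record(self, prediction, actual):
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_retrain(self):
        # Wait for enough labelled outcomes before acting on the estimate.
        if len(self.outcomes) < self.min_samples:
            return False
        return self.rolling_accuracy() < self.threshold
```

The `min_samples` guard prevents a handful of early mistakes from triggering an expensive retraining run. A scheduler can poll `should_retrain()` and start the pipeline when it returns `True`.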

A/B Testing Framework

Implement A/B testing capabilities to:

- Compare different model versions safely

- Measure business impact of model changes

- Make data-driven decisions about model updates

- Minimize risks during model transitions
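A/B tests need a stable way to decide which model version serves each user. One common approach, sketched below, hashes the user ID together with an experiment name so the assignment is deterministic and needs no stored state. The function name, the `treatment`/`control` labels, and the 10% default share are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment, treatment_share=0.1):
    """Deterministically map a user to 'treatment' or 'control'."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Reduce the hash to one of 10,000 buckets, giving a value in [0, 1).
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 10000 / 10000
    return "treatment" if bucket < treatment_share else "control"
```

Because the same user always lands in the same bucket, their experience stays consistent across requests. Raising `treatment_share` in steps turns the same function into a gradual rollout mechanism, and including the experiment name in the hash keeps assignments independent between experiments.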

Common Challenges and Solutions

When organizations deploy machine learning models, they frequently encounter specific challenges. Understanding these issues helps ensure smoother deployments.

- Model Drift: Models lose accuracy over time as real-world data changes. Solution: Implement automated drift detection and establish retraining schedules based on performance thresholds.

- Scalability Issues: Traffic spikes can overwhelm deployed models. Solution: Use auto-scaling infrastructure and implement proper load balancing strategies.

- Integration Complexity: ML models must integrate with existing business systems. Solution: Design clean APIs and establish clear data contracts between systems.

Best Practices for ML Model Deployment

Following these best practices will improve your success rate when you deploy machine learning models:

- Start Simple: Begin with basic deployment strategies before implementing complex solutions. Prove value with minimal viable deployments, then iterate and improve.

- Implement Gradual Rollouts: Use canary deployments or blue-green strategies to minimize risks. Test new models on small traffic percentages before full deployment.

- Document Everything: Maintain comprehensive documentation covering deployment procedures, model specifications, and troubleshooting guides.

- Plan for Rollbacks: Always have rollback procedures ready. Quick rollback capabilities are essential when issues arise in production.

Measuring Deployment Success

Success metrics for ML deployment extend beyond traditional software metrics. Track both technical and business KPIs:

Technical Metrics

- Model accuracy and precision in production

- System uptime and availability

- Response time and latency

- Resource utilization efficiency

Business Metrics

- Impact on key business outcomes

- Cost savings or revenue generation

- User satisfaction and engagement

- Return on investment from ML initiatives

Future Trends in ML Deployment

The landscape of ML deployment continues evolving rapidly. Stay informed about emerging trends:

- MLOps Maturity: Organizations are developing more sophisticated MLOps practices, treating ML deployment with the same rigor as traditional software development.

- Edge AI Growth: Increased deployment of models directly on edge devices for reduced latency and improved privacy.

- AutoML Integration: Automated machine learning tools are making deployment more accessible to non-experts.

For the latest trends in ML deployment, check resources at MLOps Community.

Conclusion

Deploying machine learning models to production successfully requires careful planning, robust infrastructure, and ongoing maintenance. By following the seven essential steps outlined in this guide, organizations can bridge the gap between experimental models and production value.

Deployment is not a one-time event but an ongoing process requiring continuous monitoring, updates, and improvements. Start with simple deployments, learn from experience, and gradually implement more sophisticated strategies as your MLOps maturity grows.

The investment in proper ML deployment practices pays dividends through reliable, scalable, and maintainable production systems that deliver real business value from your machine learning initiatives.